pomdp

Partially observable Markov Decision Processes

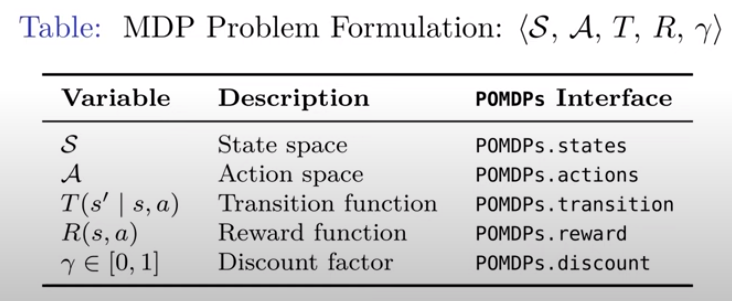

A problem formulation that enable optimal sequential decisions to be made in uncertain environments (Intro to POMDPs.jl course)

Abstractions are general to [[decision-theory]]: agent makes decisions based on current state , choosing action that is motivated to maximize the expected reward according to a policy

Types of MDP solvers

- Discrete value iteration

- Local approximation value iteration

- Global approximation value iteration

- Monte Carlo tree search

Notes on the Julia implementation, POMDP.jl